I recently watched an excellent talk from Dr. Tom Goldstein given to the National Science Foundation in which he discussed the current limitations of machine learning (ML) research and a path forward to correct those issues. The fundamental thrust of his argument, that ML research needs to focus more on experimentation and less on theory, addresses many of the shortcomings in machine learning research and touches on several interesting ideas in theories of the mind, complex systems, and the development of true artificial intelligence (AI).

Taking Lessons from Science

In the current fundamental ML research paradigm, experiments tend to be informed by theory. In particular, many researchers attempt to advance machine learning through a math-style research process, in which new theorems are deduced logically from existing theorems, lemmas, and corollaries in the machine learning corpus of knowledge. Experimental studies then attempt to validate these theories, potentially using a toy dataset to demonstrate the theory’s predictions. In this paradigm, it is unacceptable to publish experimental results that are not supported by theory. In Dr. Goldstein’s talk, he gives examples of two papers he worked on which produced surprising and counterintuitive results but were based upon empirical experimentation rather than rigorous proof. As a result, both papers struggled to be accepted at reputable conferences. However, theoretical results which are contradicted by experimental evidence tend to still be published despite the apparent inconsistency.

By contrast, the experiment-based approach used in science inverts the hierarchy found in machine learning. Theory becomes subservient to experiment: the goal of theory switches to explaining what we observe in the real world. Theory is useless if it does not align with already-existing experimental results, and previously accepted theories are tossed out if new experimental results refute them. Porting this paradigm to machine learning research would result in a landscape where most progress comes from attempting new ideas on real-world datasets. Theories would then retrospectively attempt to tie together experimental results from trying new network architectures, hyperparameters, and preprocessing techniques, developing an explanation for the results that we had already verified empirically. This would not only produce theories that are more consistent with how machine learning operates in the real world, but it would also unshackle applied machine learning progress from the constraints of existing theory. Fundamental research would now be directly oriented towards demonstrating new ideas empirically on real-world datasets, further accelerating the application of machine learning.

While very appealing, this line of thought does raise a key question: why is machine learning research better suited for experimentation-based methods rather than theory-based methods?

Complexity Theory

Before we dive into why top-down theories of machine learning are so difficult to construct through deductive logic, let’s take a brief detour into complexity theory. Wikipedia defines complexity as follows:

Complexity characterizes the behavior of a system or model whose components interact in multiple ways and follow local rules, meaning there is no reasonable higher instruction to define the various possible interactions.

Essentially, complex systems are built upon agents acting under rather simple rules. These agents are typically constrained by distance, available information, or other limiting factors. As a simplified example, think about the interactions between people in an economy. Each person’s actions are constrained by their geographic setting, their limited knowledge of the world around them, and their available resources. If we consider an economy with no access to credit, each agent’s actions essentially consist of buying or selling goods & services within this framework of constraints. While the action space (buy & sell) is rather small and the constraints placed upon each individual agent (knowledge, geographic distance, available resources) limit the scope of their actions significantly, the interactions between the agents and their decisions produce massively complex economies. To make it more concrete, while describing the economic decisions available to a merchant or farmer in the Roman Empire might be rather straightforward, describing the economic machine of Rome, which essentially amounts to interactions between many merchants and farmers, is an extremely difficult task.

This property of complex systems, in which highly complex behaviors develop from the interactions between constrained agents operating from a small set of possible actions, is known as emergence. This behavior makes describing complex systems in terms of top-down theory extremely difficult, if not impossible. These systems must be defined in terms of the interactions between their constituent parts — only then does the global behavior become clear. This is a key component for the “intelligent” behavior of many systems we observe in nature and is essential to understanding the emergent properties of neural networks.
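As a small illustration of emergence, here is a sketch of an elementary cellular automaton. This is not an example from Dr. Goldstein's talk or from the sources quoted here; it is a standard toy model swapped in because it is far smaller than an economy. Each cell looks only at itself and its two neighbors, yet the printed rows show structure that is hard to predict from the rule alone. The function names `step` and `run`, the default rule number 110, and the grid size are all choices made for this illustration.

```python
def step(cells, rule=110):
    """Apply one update of an elementary cellular automaton (wraparound edges).

    Each cell's next state depends only on itself and its two neighbors,
    which is the "local rule" constraint described above.
    """
    n = len(cells)
    nxt = []
    for i in range(n):
        left, center, right = cells[(i - 1) % n], cells[i], cells[(i + 1) % n]
        # Encode the 3-cell neighborhood as a number from 0 to 7.
        neighborhood = (left << 2) | (center << 1) | right
        # The rule number's bits say whether each neighborhood yields 0 or 1.
        nxt.append((rule >> neighborhood) & 1)
    return nxt


def run(width=31, steps=15, rule=110):
    """Start from a single live cell and return the list of all generations."""
    cells = [0] * width
    cells[width // 2] = 1
    history = [cells]
    for _ in range(steps):
        cells = step(cells, rule)
        history.append(cells)
    return history


if __name__ == "__main__":
    for row in run():
        print("".join("#" if c else "." for c in row))
```

Running the script prints one row per generation. The point is only that the global pattern has to be observed by running the system, which mirrors the argument that complex systems resist top-down description.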

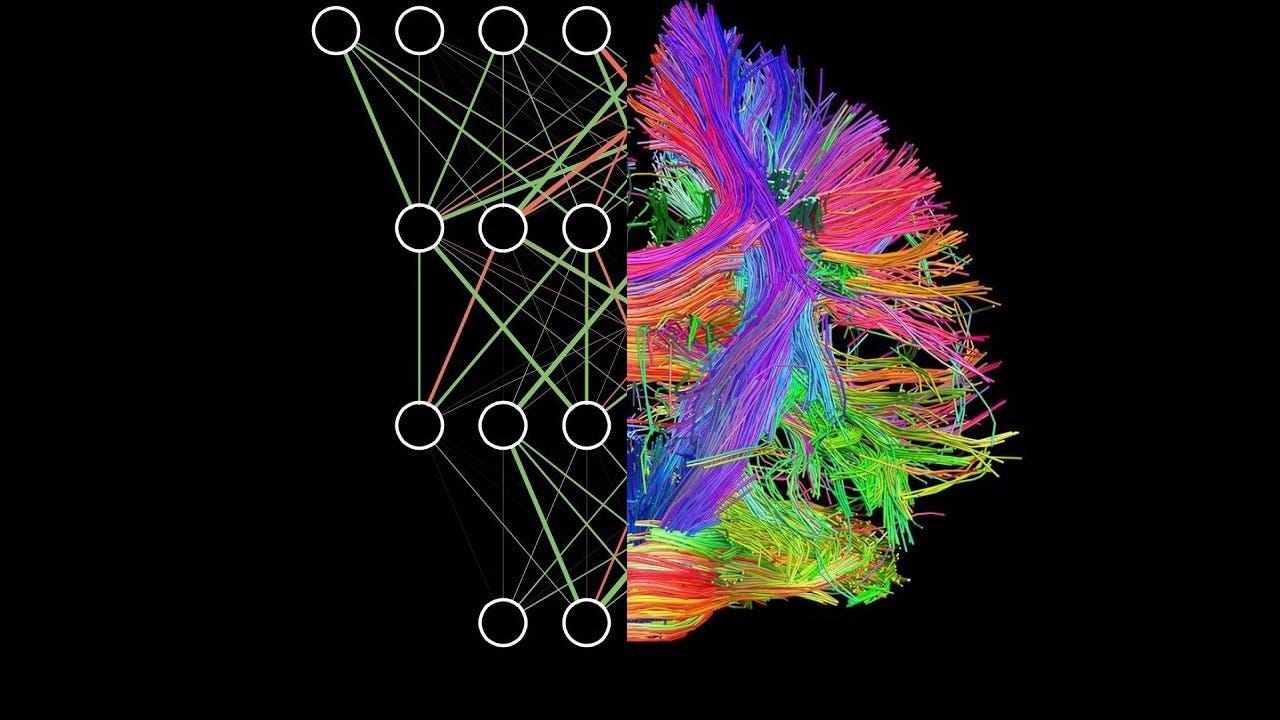

Biological & Artificial Neural Networks as Complex Systems

The brain, the most powerful thinking machine known, consists of about 86 billion neurons. Each individual neuron behaves rather simply — it receives input from its environment in the form of pressure, stretch, chemical transmitters, and changes of the electric potential across the cell membrane. This input then determines whether the neuron “turns on” or not. That is, the voltage of the cell membrane rapidly rises and falls, creating an electrical spike in response to the input. The key piece of the brain, and the reason why neurons are not simple voltage spiking machines, is that each neuron is connected to thousands of other neurons through synapses. In this way, the electrical spikes that occur in one neuron propagate to thousands of others, either inhibiting or facilitating spikes in those neurons. In turn, those neurons’ signals propagate to other neurons, creating a cascade of neural activation.

These cascades of neural activation create complex behavior, such as your ability to read this article while simultaneously being conscious of yourself, your thoughts, and your emotions, despite starting from a rather simple process — that of the activation of a single neuron. This is best captured by Bassett and Gazzaniga in their 2011 paper Understanding Complexity in the Human Brain:

Perhaps most simply, emergence — of consciousness or otherwise — in the human brain can be thought of as characterizing the interaction between two broad levels: the mind and the physical brain. To visualize this dichotomy, imagine that you are walking with Leibniz through a mill. Consider that you can blow the mill up in size such that all components are magnified and you can walk among them. All that you find are mechanical components that push against each other but there is little if any trace of the function of the whole mill represented at this level. This analogy points to an important disconnect in the mind–brain interface: although the material components of the physical brain might be highly decomposable, mental properties seem to be fundamentally indivisible.

When we zoom into a single neuron, the functionality appears fairly straightforward, but the emergent properties of the mind are completely obscured. It is the interactions between massive numbers of neurons that drive the highly complex behavior exhibited by humans and other animals with large numbers of interacting neurons and neural connections. In this way, top-down theories of intelligence and brain function have been thwarted — developing a compact theory of brain function is akin to developing a compact theory describing the interactions of hundreds of millions of people in the US economy.

Similarly, artificial neural networks are composed of artificial neurons, loosely based on their biological equivalents. Connections between neurons in the network are established, similar to synapses in the human brain, allowing the artificial neurons to transmit signals to one another. Deep neural networks, the most successful example of machine learning in the field today, stack multiple layers of neurons between the input and output layers. These additional layers allow for massive numbers of neurons and connections (the language model GPT-3 has about 175 billion parameters, roughly corresponding to the number of learned connection weights in the model). These huge networks, as in the case of biological neural networks, exhibit intelligent behavior despite relatively simple components. This is captured by Testolin, Piccolini, and Suweis in their 2018 paper Deep Learning Systems as Complex Networks:

…in deep learning even knowing perfectly how a single neuron (node) of the network works does not allow to understand how learning occurs, why these systems work so efficiently in many different tasks, and how they avoid getting trapped in configurations that deteriorate computational performance. In these models, interactions play a crucial role during the learning process, therefore a step forward toward a more comprehensive understanding of deep learning systems is their study also in terms of their emerging topological properties.

The neurons themselves are governed by quite simple laws, but the interactions between those neurons produce incredibly complex behavior, which powers applications such as automatic speech-to-text programs, self-driving cars, and facial recognition software. Given what we know about complex systems and the property of emergence, it seems reasonable that deep neural networks would be difficult to describe without accounting for the interactions between the billions of neural connections that make up the network. The quest for top-down theories produced from deductive logical reasoning may be fruitless in this complexity-laden case.
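To make the "simple laws" concrete, the sketch below shows one common textbook form of an artificial neuron (a weighted sum followed by a ReLU nonlinearity) and counts the parameters of a fully connected network. The helper names `neuron` and `count_parameters` and the layer sizes are assumptions made for this illustration. Real architectures such as GPT-3 are structured differently, so this only shows why parameter counts grow so quickly with layer width.

```python
def neuron(inputs, weights, bias):
    """One artificial neuron: weighted sum of inputs plus bias, then ReLU."""
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    return max(0.0, total)


def count_parameters(layer_sizes):
    """Count weights and biases in a fully connected network.

    layer_sizes lists the number of units per layer, input layer first.
    Each pair of adjacent layers contributes (fan_in * fan_out) weights
    plus one bias per unit in the receiving layer.
    """
    total = 0
    for fan_in, fan_out in zip(layer_sizes, layer_sizes[1:]):
        total += fan_in * fan_out + fan_out
    return total


if __name__ == "__main__":
    print(neuron([1.0, 2.0], [0.5, -1.0], 0.25))
    print(count_parameters([784, 128, 10]))
```

Each neuron is a one-line computation, while the parameter count scales with the product of adjacent layer widths. That is where the sheer number of interactions comes from.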

Experimental Research as a Solution to Understanding Complexity

Now, returning to our original topic, we can begin to see how experimental methods address the fundamental issues with understanding complex systems. Deep neural networks and other machine learning methods based on large-scale interactions between simple components do not lend themselves well to top-down theoretical understanding. However, we can tease out the emergent behavior of these systems by using real-world datasets, choosing a particular behavior we would like to examine, and constructing experiments to understand the emergent behavior of the network in question.

For example, we may construct experiments to measure how the number of iterations needed for the weights of a convolutional neural network to converge changes as the quality of the image dataset increases or decreases. While this experimental result would not explain fundamentally why the network’s convergence rate behaves the way it does, it provides insight into the emergent behavior of the complex system, namely the convolutional neural network itself. With enough experimental results of this nature, we may be able to piece together insights to begin understanding the behavior of these networks and how they respond to varying stimuli. I believe this type of understanding, as put forth by Dr. Goldstein, will pave the way for significant improvements in machine learning in both academia and industry, even though it lacks theoretical explanations and justifications.
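The shape of such an experiment can be sketched in a few lines. The code below is a deliberately tiny stand-in, not a convolutional network and not an image dataset: it trains a one-feature logistic regression by gradient descent on synthetic data and uses the label-noise rate as a rough proxy for "dataset quality". Every name here (`make_dataset`, `iterations_to_converge`, `run_experiment`), the convergence criterion, and the hyperparameters are assumptions chosen for illustration. The script reports iteration counts without asserting any particular trend, since finding that out is the point of running the experiment.

```python
import math
import random


def make_dataset(n, label_noise, rng):
    """Synthetic 1-D data: label is 1 when x > 0, flipped with prob. label_noise."""
    data = []
    for _ in range(n):
        x = rng.uniform(-1.0, 1.0)
        y = 1 if x > 0 else 0
        if rng.random() < label_noise:
            y = 1 - y
        data.append((x, y))
    return data


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def mean_loss(w, b, data):
    """Average cross-entropy loss; eps guards against log(0)."""
    eps = 1e-12
    total = 0.0
    for x, y in data:
        p = sigmoid(w * x + b)
        total -= y * math.log(p + eps) + (1 - y) * math.log(1 - p + eps)
    return total / len(data)


def iterations_to_converge(data, lr=0.5, tol=1e-6, max_iters=2000):
    """Run full-batch gradient descent until the loss changes by less than tol.

    Returns the iteration count, capped at max_iters if tol is never reached.
    """
    w, b = 0.0, 0.0
    prev = mean_loss(w, b, data)
    for it in range(1, max_iters + 1):
        gw = sum((sigmoid(w * x + b) - y) * x for x, y in data) / len(data)
        gb = sum((sigmoid(w * x + b) - y) for x, y in data) / len(data)
        w -= lr * gw
        b -= lr * gb
        cur = mean_loss(w, b, data)
        if abs(prev - cur) < tol:
            return it
        prev = cur
    return max_iters


def run_experiment(noise_levels=(0.0, 0.1, 0.3), n=100, seed=0):
    """Map each label-noise level to the iterations needed to converge."""
    results = {}
    for noise in noise_levels:
        rng = random.Random(seed)  # same seed so only the noise level varies
        results[noise] = iterations_to_converge(make_dataset(n, noise, rng))
    return results


if __name__ == "__main__":
    for noise, iters in run_experiment().items():
        print(f"label noise {noise:.1f}: {iters} iterations")
```

A real study would repeat this over many seeds, architectures, and datasets before drawing conclusions. The sketch only shows the loop of varying one factor, measuring one behavior, and recording the result.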